Welcome to the hosting tutorial for your lab.

Please make sure to read the hardware requirements before continuing with this page.

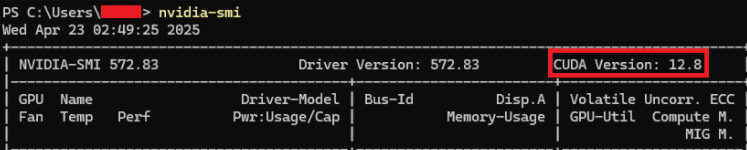

- After cloning the Hosting branch to your computer, verify that your NVIDIA GPU supports CUDA by running the following command in a terminal (Command Prompt on Windows):

`nvidia-smi`
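You can also script this check. The following is a minimal Python sketch, not part of the Hosting branch, that looks for `nvidia-smi` on your `PATH` and runs it. It assumes only that the NVIDIA driver installs `nvidia-smi`. The helper name `check_nvidia_gpu` is hypothetical.

```python
import shutil
import subprocess


def check_nvidia_gpu() -> bool:
    """Hypothetical helper: return True if nvidia-smi is found and exits successfully."""
    # nvidia-smi is installed with the NVIDIA driver; if it is missing,
    # the driver is most likely not installed or not on PATH.
    exe = shutil.which("nvidia-smi")
    if exe is None:
        print("nvidia-smi not found: install or update your NVIDIA driver.")
        return False
    # Run it once; its output typically lists the GPU, driver version and CUDA version.
    result = subprocess.run([exe], capture_output=True, text=True)
    print(result.stdout)
    return result.returncode == 0


if __name__ == "__main__":
    check_nvidia_gpu()
```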

- Update your GPU driver if needed: look up your GPU model and download its latest driver here.

- The Hosting branch is already configured to run the AI model and the embedding model on all visible GPUs. To change this setting, edit the `backend` and `ollama` services in `docker-compose.yml`.


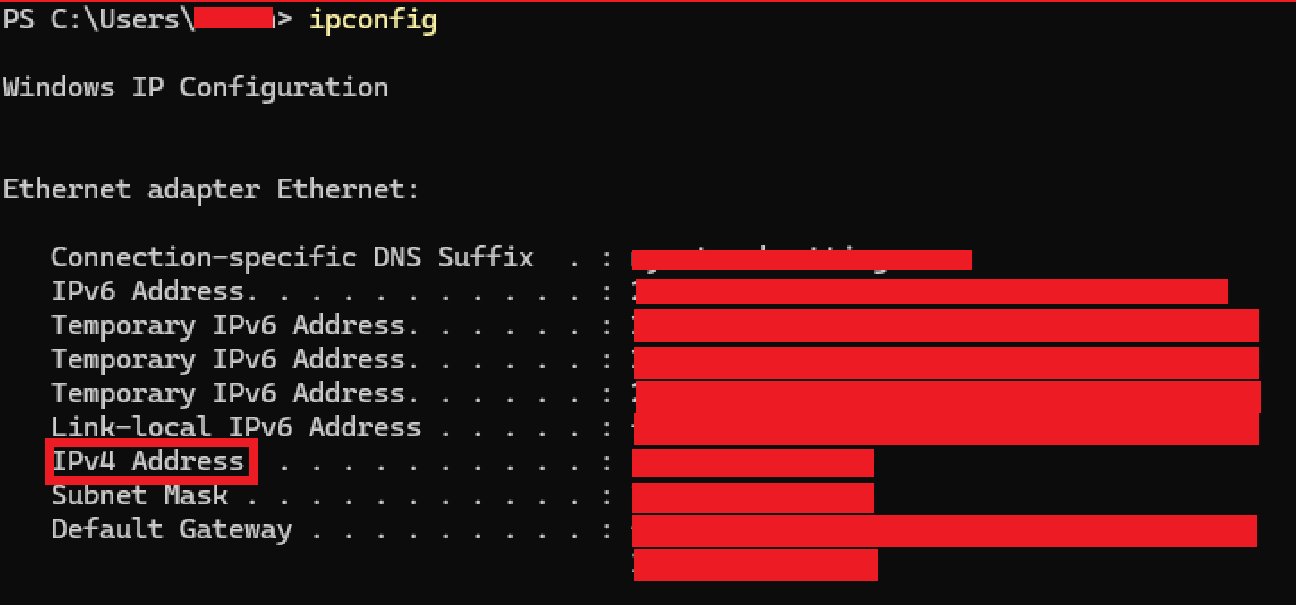

- Next, check your local IP address by running `ipconfig` in a terminal and looking for the `IPv4 Address` entry.
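As an alternative to reading the `ipconfig` output by hand, the minimal Python sketch below asks the operating system which local IPv4 address it would use for outbound traffic. It relies on a common trick: calling `connect()` on a UDP socket sends no packets, but it makes the OS choose a route and a source address.

A few assumptions apply:
- The probe target `8.8.8.8` is just an assumed routable address.
- The helper name `local_ipv4` is hypothetical.
- On a machine with several network adapters, compare the result with `ipconfig` before using it.

```python
import socket


def local_ipv4(probe_host: str = "8.8.8.8", probe_port: int = 80) -> str:
    """Hypothetical helper: return the IPv4 address the OS would use to reach probe_host."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends nothing; it only selects a route and source address.
        sock.connect((probe_host, probe_port))
        return sock.getsockname()[0]
    except OSError:
        # No route available (for example, the machine is offline): fall back to loopback.
        return "127.0.0.1"
    finally:
        sock.close()


if __name__ == "__main__":
    print("IPv4 Address:", local_ipv4())
```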

- Change your app's hosting IP address so that it is visible to all users on your local network:


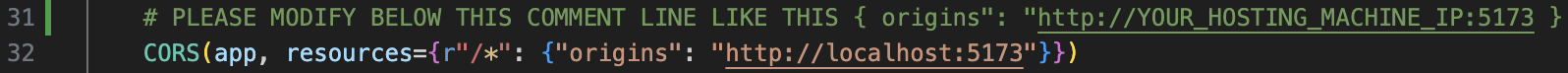

- Using the local IP address of your hosting device (found with `ipconfig` above), update line 31 of `backend/app/__init__.py`.


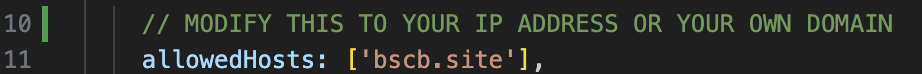

- After updating the backend hosting IP, open `frontend/vite.config.js` and replace `localhost` with your IP address.
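If you would rather not edit `frontend/vite.config.js` by hand, here is a minimal Python sketch that replaces every occurrence of `localhost` in a file with your IP address. It keeps a `.bak` copy of the original file.

It assumes that `localhost` appears in the file only where the host should change, so review the file afterwards. The helper name `replace_host` and the example IP `192.168.1.50` are hypothetical.

```python
import shutil
from pathlib import Path


def replace_host(path: str, new_host: str, old_host: str = "localhost") -> int:
    """Hypothetical helper: replace old_host with new_host in a text file.

    Returns the number of occurrences replaced (0 means the file was left untouched).
    """
    file = Path(path)
    text = file.read_text(encoding="utf-8")
    count = text.count(old_host)
    if count:
        # Keep a backup next to the original so the change is easy to undo.
        shutil.copyfile(file, file.with_name(file.name + ".bak"))
        file.write_text(text.replace(old_host, new_host), encoding="utf-8")
    return count
```

Example usage from the repository root, substituting the address reported by `ipconfig`: `replace_host("frontend/vite.config.js", "192.168.1.50")`.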

- Save all the files you have modified and open Docker Desktop, then run the following two commands in a terminal:

`docker compose -f docker-compose.yml build`

Wait for the build to complete, then run:

`docker compose -f docker-compose.yml up`
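The two commands must run in order, and `up` should only start if `build` succeeded. The following is a minimal Python sketch of that sequence; it is not part of the repository, and the helper names are hypothetical. It uses `subprocess.run(..., check=True)`, so a failed build raises `CalledProcessError` and stops the script before `up` runs.

It assumes two things:
- The `docker` CLI installed with Docker Desktop is on your `PATH`.
- You run it from the folder that contains `docker-compose.yml`.

```python
import subprocess
from typing import List


def compose_commands(compose_file: str = "docker-compose.yml") -> List[List[str]]:
    """Hypothetical helper: the build and up commands from this tutorial, in order."""
    base = ["docker", "compose", "-f", compose_file]
    return [base + ["build"], base + ["up"]]


def build_and_start(compose_file: str = "docker-compose.yml") -> None:
    """Hypothetical helper: run build, then up.

    check=True raises CalledProcessError on a non-zero exit code,
    so `up` never runs after a failed build.
    """
    for cmd in compose_commands(compose_file):
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

Call `build_and_start()` to run both steps. Without `-d`, `docker compose up` stays in the foreground and streams container logs; stop it with Ctrl+C.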

This is the final step. You can keep your editor open or close it.